
A single point of failure is a component of a system whose failure causes an outage of the whole system. In the Information Technology sector, a cluster is used to avoid this risk: it is composed of at least two synchronized nodes, so that when one of the nodes fails, the other takes over responsibility for the services.
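As a rough illustration of why a second node helps, assume each node is available a fraction A of the time, that the two nodes fail independently, and that failover is instantaneous (all three are simplifying assumptions, not properties of any particular product). The service is then down only when both nodes are down at once:

$$A_{\text{cluster}} = 1 - (1 - A)^2$$

For example, with A = 0.99 for each node, A_cluster = 1 - 0.01^2 = 0.9999, i.e. roughly 99.99% instead of 99%. Shared dependencies such as a common switch or power feed break the independence assumption, which is one reason a dedicated cluster interface is recommended later in this article.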

Why a Cluster

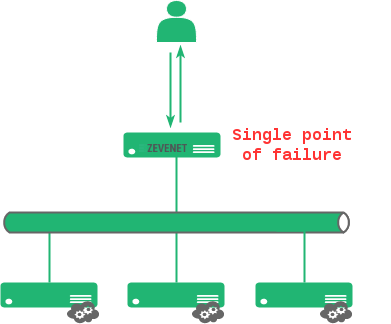

Load balancing is the task of providing a single network service from multiple servers, sometimes known as a server farm.

The load balancer is usually a software program embedded in a virtual or physical appliance that listens on the port where external clients connect to access services. The load balancer forwards each request to one of the backend servers, which usually replies to the load balancer. This allows the load balancer to reply to the client without the client ever knowing about the internal separation of functions. It can also automatically adjust the capacity provided to match any increase or decrease in application traffic. The following diagram shows a typical network configuration for serving a web application.
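The forwarding behaviour described above can be sketched in a few lines. This is a minimal toy model, assuming a simple round-robin scheduling policy (only one of several possible policies) and hypothetical backend addresses; it does not open any sockets and is not how Zevenet implements its farms.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Toy load balancer: hands each incoming request to the next backend in turn."""

    def __init__(self, backends):
        if not backends:
            raise ValueError("at least one backend is required")
        self._backends = list(backends)
        self._next = cycle(self._backends)

    def pick_backend(self):
        # The client only ever talks to the balancer; it never learns
        # which backend was chosen.
        return next(self._next)

    def handle(self, request):
        backend = self.pick_backend()
        # A real balancer would proxy the request over the network here;
        # this sketch only reports which backend would have served it.
        return f"{request} -> {backend}"


if __name__ == "__main__":
    # Hypothetical backend addresses, for illustration only.
    lb = RoundRobinBalancer(["10.0.0.11:80", "10.0.0.12:80", "10.0.0.13:80"])
    for i in range(4):
        print(lb.handle(f"GET /page{i}"))
```

With three backends, the fourth request wraps around to the first backend again, which is how the farm spreads traffic evenly when all servers are equivalent.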

It also prevents clients from contacting the back-ends directly, which may have security benefits by hiding the structure of the internal network and preventing attacks on the kernel network stack or on unrelated services running on other ports. But what happens if the load balancer fails?

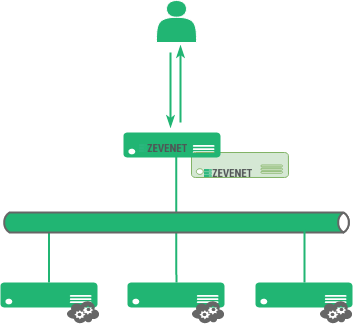

Load balancing gives the IT team a chance to achieve significantly higher fault tolerance. To ensure that the load balancer itself does not become a single point of failure, load balancers must be deployed in a high availability (HA) cluster, i.e., a pair of appliances. In the same example with an HA cluster for load balancing, the single load balancer is replaced by a pair of synchronized nodes.
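To make the failover idea concrete, here is a simplified model of how a VRRP-style cluster decides which node should own the service: among the healthy nodes, the highest priority wins, and a tie is broken by the higher IP address, as in the VRRP specification. The node names, addresses and priorities are hypothetical, and this sketch ignores timers, advertisements and preemption, so it should not be read as Zevenet's actual cluster logic.

```python
from dataclasses import dataclass
from ipaddress import IPv4Address
from typing import List, Optional


@dataclass
class Node:
    name: str
    ip: IPv4Address
    priority: int          # higher value wins, as in VRRP
    healthy: bool = True   # False simulates a failed node


def elect_master(nodes: List[Node]) -> Optional[Node]:
    """Return the node that should hold the service, or None if all are down.

    Simplified VRRP-style rule: among healthy nodes the highest priority
    wins; ties are broken by the higher IP address.
    """
    candidates = [n for n in nodes if n.healthy]
    if not candidates:
        return None
    return max(candidates, key=lambda n: (n.priority, n.ip))


if __name__ == "__main__":
    # Hypothetical two-node cluster.
    node1 = Node("node1", IPv4Address("192.168.100.1"), priority=100)
    node2 = Node("node2", IPv4Address("192.168.100.2"), priority=50)
    cluster = [node1, node2]
    print(elect_master(cluster).name)  # node1 holds the service
    node1.healthy = False              # simulate a failure of node1
    print(elect_master(cluster).name)  # node2 takes over
```

The key property is that the decision depends only on the current state of the nodes, so when the master disappears the survivor takes over without any manual step.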

We have seen the importance of setting up an HA cluster. Now let's see how to configure it with Zevenet Load Balancer.

Cluster Requirements

When configuring a new cluster, ensure that both nodes are using the same kernel version (i.e., the same appliance model).

- Ensure that the NTP service is configured and working properly on both future cluster nodes.

- Ensure that DNS is configured and working properly on both future cluster nodes.

- Ensure that the future cluster members can ping each other through the interface used for the cluster (e.g., the IP of eth0 on node1 and the IP of eth0 on node2).

- It is recommended to dedicate an interface to the cluster service.

- The cluster service uses multicast communication and VRRP packets in unicast mode; ensure that these kinds of packets are allowed by your switches.

- Once the cluster service is enabled, the farms and virtual interfaces on the slave node will be deleted, and the configuration of the master node will be replicated to the slave.
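The checklist above can be turned into a small pre-flight check. This is a hypothetical helper, not part of Zevenet: it assumes you have already gathered the facts from each node yourself (for example the kernel release from `uname -r`, and whether NTP, DNS and ping work) and only compares them, so it performs no network access on its own.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class NodeFacts:
    """Facts collected by hand (or by your own tooling) from one future node."""
    name: str
    kernel: str          # e.g. the output of `uname -r`
    ntp_synced: bool
    dns_ok: bool
    can_ping_peer: bool  # over the interface dedicated to the cluster


def cluster_preflight(a: NodeFacts, b: NodeFacts) -> List[str]:
    """Return a list of problems; an empty list means the checklist passes."""
    problems = []
    if a.kernel != b.kernel:
        problems.append(f"kernel mismatch: {a.name}={a.kernel}, {b.name}={b.kernel}")
    for node in (a, b):
        if not node.ntp_synced:
            problems.append(f"{node.name}: NTP is not synchronized")
        if not node.dns_ok:
            problems.append(f"{node.name}: DNS resolution is failing")
        if not node.can_ping_peer:
            problems.append(f"{node.name}: cannot ping the peer on the cluster interface")
    return problems


if __name__ == "__main__":
    # Hypothetical values, for illustration only.
    node1 = NodeFacts("node1", "4.19.0", ntp_synced=True, dns_ok=True, can_ping_peer=True)
    node2 = NodeFacts("node2", "4.19.0", ntp_synced=False, dns_ok=True, can_ping_peer=True)
    for problem in cluster_preflight(node1, node2) or ["all checks passed"]:
        print(problem)
```

Returning a list of problems instead of stopping at the first one lets you fix every issue on both nodes before enabling the cluster, which matters because enabling it deletes the farms and virtual interfaces on the slave.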

Cluster Configuration for Zevenet Community Edition

To configure a cluster properly on Community Edition v5 or higher, please follow this procedure:

How to configure a cluster in Zevenet Community Edition v.5.0

Cluster Configuration for Zevenet Enterprise Edition

The Zevenet Enterprise Edition cluster service is fully integrated into the Zevenet Core and can be managed and configured easily as follows:

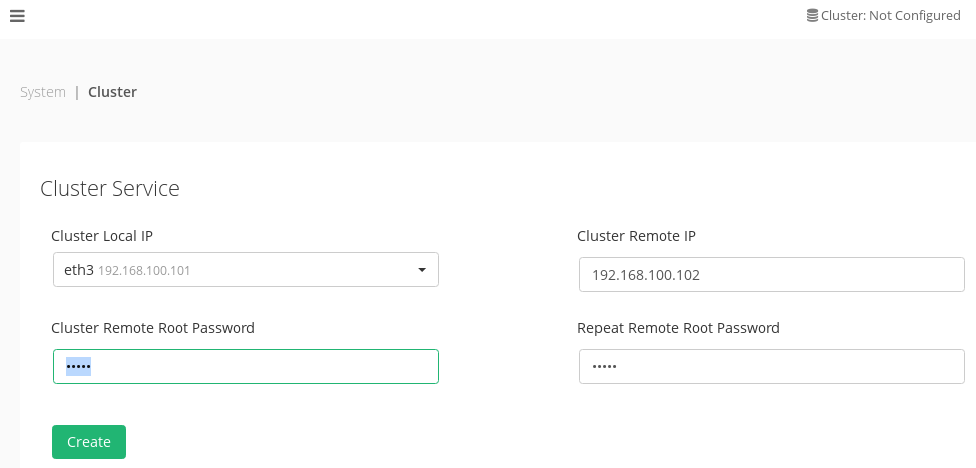

- In the web GUI, go to the side menu “SYSTEM > Cluster”.

- Click the “Cluster Local IP” select field and choose the interface dedicated to the cluster service.

- Enter the IP of the secondary node on the same network interface you selected on the first node.

- Type the slave root password in the field “Cluster Remote Root Password” and retype the same password in the confirmation field.

- Now click “Create” and the cluster configuration process will start.
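Before clicking “Create”, it can help to sanity-check the values you are about to enter. The function below is a hypothetical sketch that mirrors the steps above using only Python's standard `ipaddress` module: the remote IP should be on the same network as the selected local interface, and the two password fields should match. It does not call any Zevenet API, and the interface is assumed to be written in CIDR form such as `192.168.100.1/24`.

```python
import ipaddress
from typing import List


def validate_cluster_form(local_if: str, remote_ip: str,
                          password: str, password_retyped: str) -> List[str]:
    """Return a list of input errors; an empty list means the form looks sane.

    local_if is the local cluster interface in CIDR form (assumption);
    malformed addresses raise ValueError from the ipaddress module.
    """
    errors = []
    local = ipaddress.ip_interface(local_if)
    remote = ipaddress.ip_address(remote_ip)
    if remote == local.ip:
        errors.append("remote IP must differ from the local cluster IP")
    elif remote not in local.network:
        errors.append(f"remote IP {remote} is not in {local.network}")
    if not password:
        errors.append("remote root password is empty")
    elif password != password_retyped:
        errors.append("the two password fields do not match")
    return errors


if __name__ == "__main__":
    # Hypothetical addresses and password, for illustration only.
    print(validate_cluster_form("192.168.100.1/24", "192.168.100.2", "s3cret", "s3cret"))
    print(validate_cluster_form("192.168.100.1/24", "10.0.0.2", "s3cret", "typo"))
```

Checking that both nodes share the cluster network catches the most common mistake before the slave's farms are wiped by the replication step.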


If you need further information, please see the article “Configure the cluster service”.